Transformers - Why does self-attention calculate the dot product of q and k from the same word? - Data Science Stack Exchange

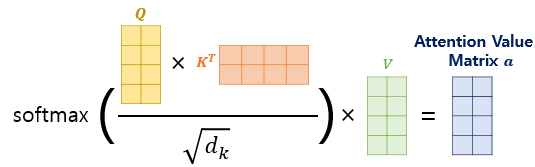
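This question is about the diagonal of the attention score matrix. In the standard formulation, each query is scored against every key in the sequence, including the key from its own token. The notation here is ours: token embeddings $x_i$ are row vectors, and $W^Q$, $W^K$ are the learned query and key projections.

$$
s_{ij} = \frac{q_i k_j^\top}{\sqrt{d_k}} = \frac{x_i W^Q \left(W^K\right)^\top x_j^\top}{\sqrt{d_k}},
\qquad
\alpha_{ij} = \frac{\exp(s_{ij})}{\sum_{j'=1}^{n} \exp(s_{ij'})}
$$

The self-score $s_{ii}$ is just the $j = i$ term in that softmax. $W^Q$ and $W^K$ are learned separately, so $s_{ii} = x_i W^Q (W^K)^\top x_i^\top / \sqrt{d_k}$ is a general bilinear form and not a squared norm. It can be small or even negative, which means a token need not attend most strongly to itself.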

How to Implement Scaled Dot-Product Attention from Scratch in TensorFlow and Keras - MachineLearningMastery.com

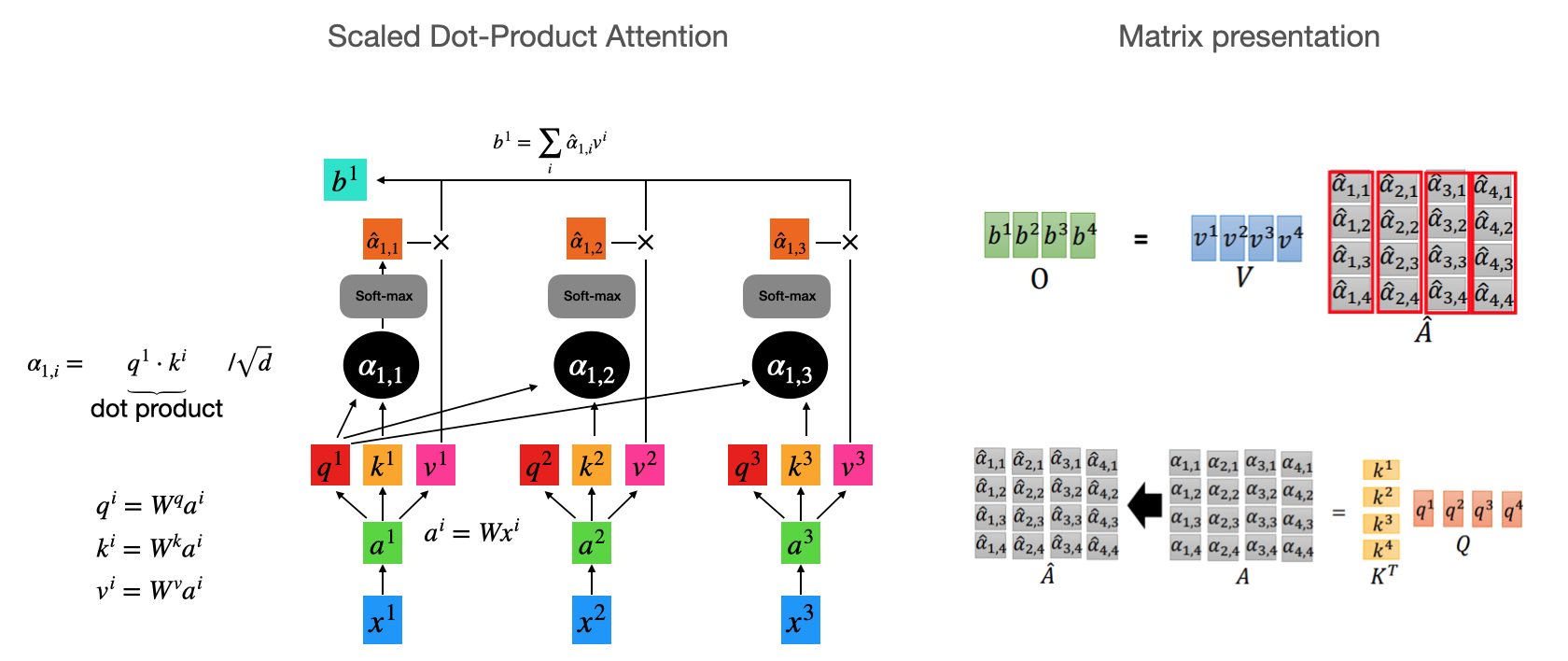
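The MachineLearningMastery tutorial builds this in TensorFlow/Keras. As a framework-free illustration, here is a minimal NumPy sketch of scaled dot-product attention, $\mathrm{softmax}(QK^\top/\sqrt{d_k})V$. The function names `softmax` and `scaled_dot_product_attention` are our own. The mask convention (`True` means "do not attend") is an assumption and may not match the tutorial's.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability; the result is unchanged.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, mask=None):
    """Return (softmax(q @ k^T / sqrt(d_k)) @ v, attention_weights).

    q: (..., n_q, d_k), k: (..., n_k, d_k), v: (..., n_k, d_v)
    mask: optional bool array broadcastable to (..., n_q, n_k);
          True marks positions that must NOT be attended to (assumed convention).
    """
    d_k = q.shape[-1]
    # Similarity score between every query and every key, scaled by sqrt(d_k).
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        # A very large negative score makes the corresponding softmax weight ~0.
        scores = np.where(mask, -1e9, scores)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


# Toy self-attention: Q, K and V are all the same x (no learned projections, for brevity).
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))  # 4 tokens, embedding size 8
out, w = scaled_dot_product_attention(x, x, x)
print(out.shape, w.shape)  # (4, 8) (4, 4)
```

Q and K come from the same sequence here, so `w[i, i]` is the weight token `i` assigns to itself. It is one entry in a softmax over all positions. The `mask` argument shows one common way to block positions, such as future tokens in a decoder.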

Attention model in Transformer. (a) Scaled dot-product attention model....

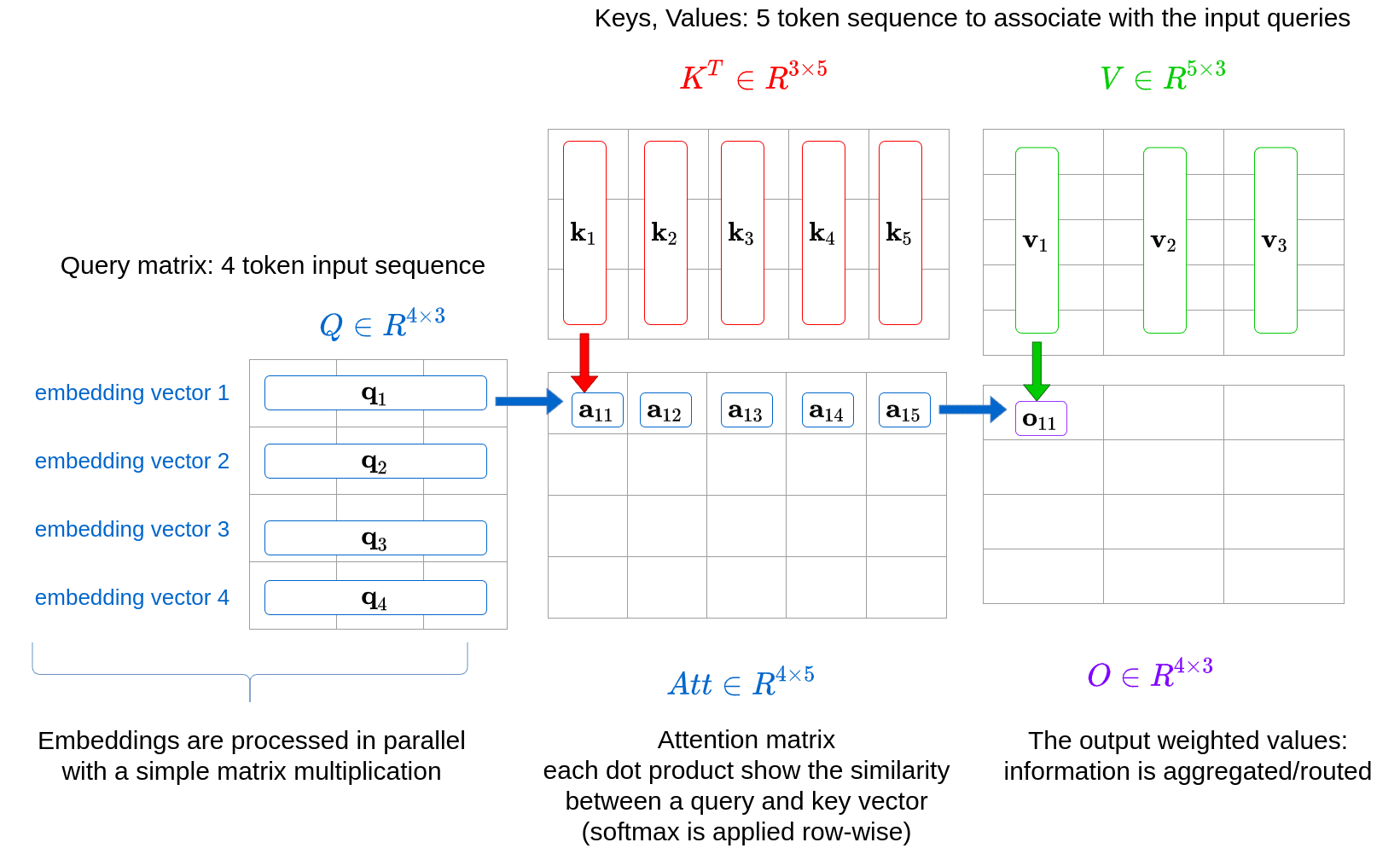

In Depth Understanding of Attention Mechanism (Part II) - Scaled Dot-Product Attention and Example | by FunCry | Feb, 2023 | Medium

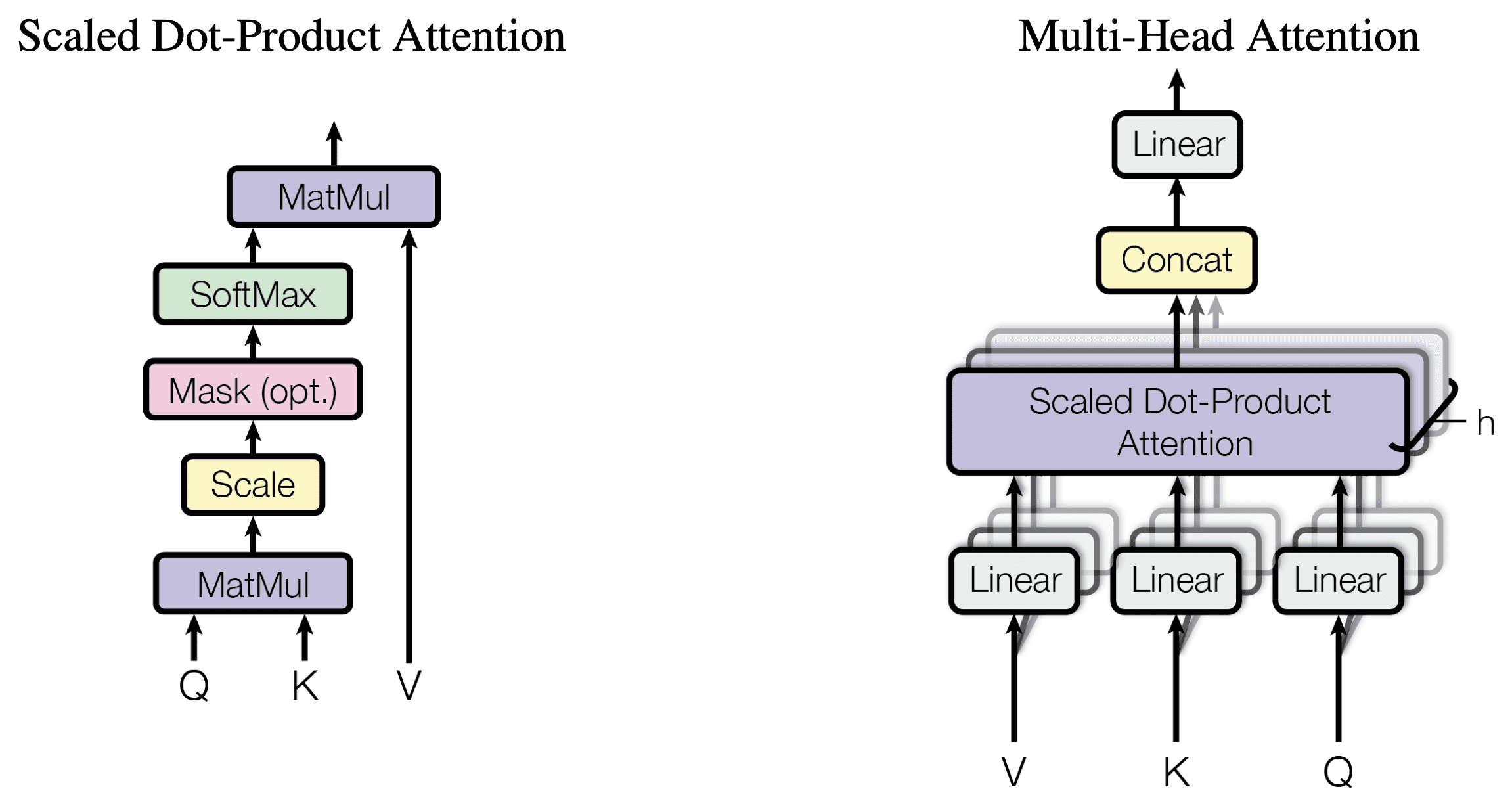

Illustration of the scaled dot-product attention (left) and multi-head...

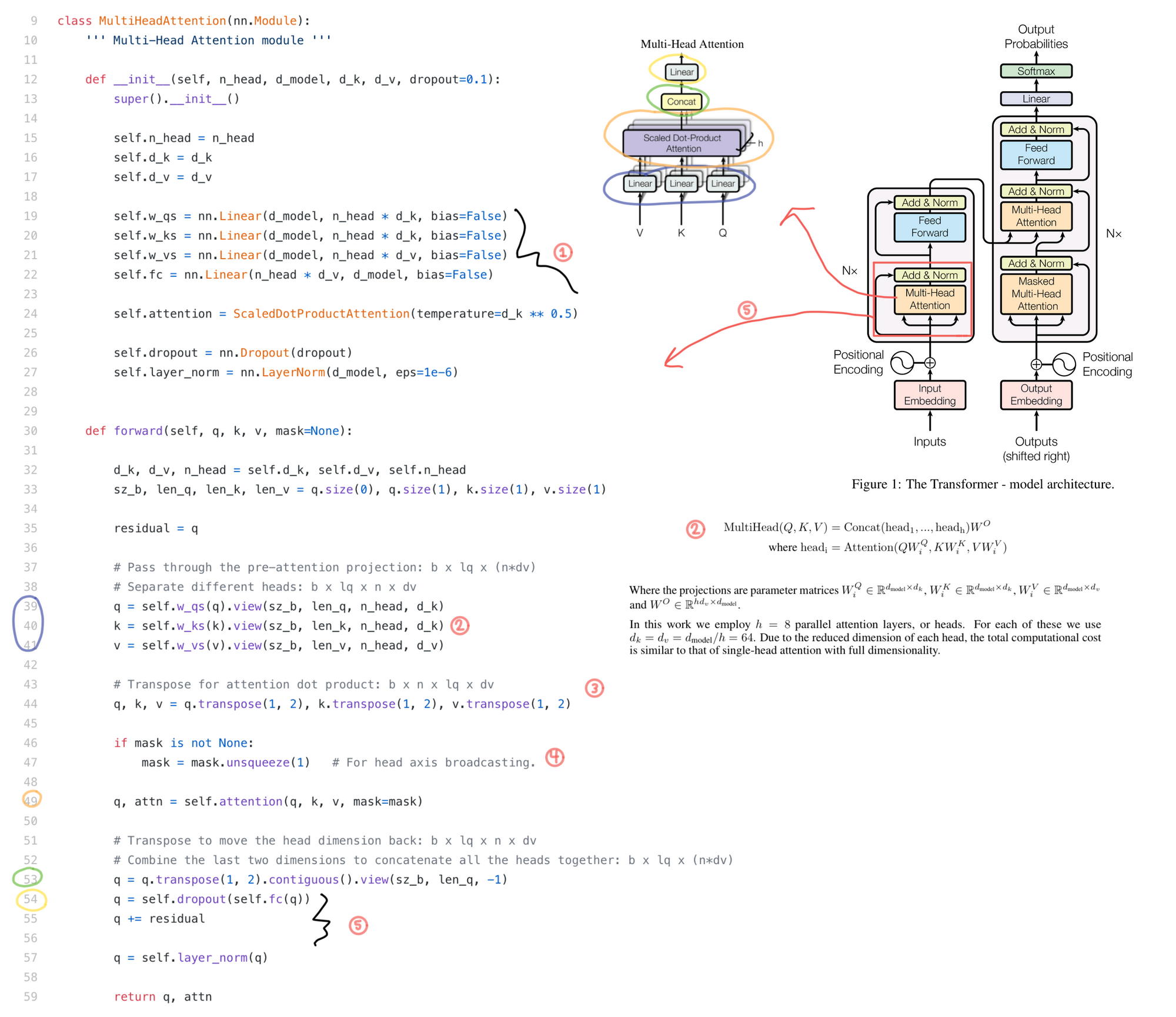
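Multi-head attention runs several scaled dot-product attentions in parallel on lower-dimensional projections of the input, then concatenates the results and projects them back. Below is a minimal NumPy sketch under these assumptions: one unbatched sequence, no masking, no bias terms, and `d_model × d_model` weight matrices whose columns are split evenly across heads. This is the usual layout, but some libraries arrange weights differently. The names `multi_head_attention`, `split_heads`, `attention` and the weight variables are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def attention(q, k, v):
    # Scaled dot-product attention; the leading head axis is broadcast over.
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def multi_head_attention(x_q, x_kv, w_q, w_k, w_v, w_o, num_heads):
    """x_q: (n_q, d_model), x_kv: (n_kv, d_model); each weight: (d_model, d_model)."""
    d_model = x_q.shape[-1]
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads

    def split_heads(t):
        # (n, d_model) -> (num_heads, n, d_head); head i gets columns i*d_head:(i+1)*d_head.
        return t.reshape(t.shape[0], num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(x_q @ w_q)
    k = split_heads(x_kv @ w_k)
    v = split_heads(x_kv @ w_v)
    heads = attention(q, k, v)  # (num_heads, n_q, d_head)
    # Concatenate the heads along the feature axis, then apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(x_q.shape[0], d_model)
    return concat @ w_o


rng = np.random.default_rng(0)
d_model, h = 8, 2
w_q, w_k, w_v, w_o = (rng.standard_normal((d_model, d_model)) / np.sqrt(d_model) for _ in range(4))
x = rng.standard_normal((5, d_model))  # 5 tokens
y = multi_head_attention(x, x, w_q, w_k, w_v, w_o, num_heads=h)
print(y.shape)  # (5, 8)
```

Each head scales its scores by $\sqrt{d_\text{head}}$, its own key dimension, and not by $\sqrt{d_\text{model}}$. Passing the same array as `x_q` and `x_kv` gives self-attention. Passing different arrays gives encoder-decoder (cross) attention.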

14.3. Multi-head Attention, deep dive - Deep Learning Bible - 3. Natural Language Processing